TANGO (gaze understanding)

To navigate the social world and interact with others, we use social cognition. Much research has been devoted to the average age at which social-cognitive abilities emerge in development, whereas individual-level variation has often been overlooked. However, if we want to answer research questions about the developmental sequence of social-cognitive abilities and the mechanisms that subserve their development, we need studies that reliably measure individual differences. Few traditional measures meet this requirement. Many (e.g., false belief change-of-location tasks) rely on few trials and dichotomous outcomes, lack satisfactory psychometric properties, and are thus not designed to capture variation between children. We argue that we must therefore address the methodological limitations of existing study designs and adopt a systematic individual differences perspective on social-cognitive development.

At the moment, we are creating a new test battery to capture the development of individual differences in social cognition. We designed an interactive web app that works across devices and enables in-person and remote testing.

The web app was developed using JavaScript, HTML5, CSS, and PHP. For stimulus presentation, the app parses a Scalable Vector Graphics (SVG) composition. This way, the composition scales according to the user’s viewport without loss of quality while keeping the aspect ratio and relative object positions constant. Furthermore, SVGs allow us to define all composite parts of the scene individually, which is needed for precisely calculating the target locations and sizes and also makes it easy to adjust the stimuli. The web app generates two file types: (1) a text file (.json) containing metadata, trial specifications, and participants’ click responses, and (2) a video file (.webm) of the participant’s webcam recording. These files can either be sent to a server or downloaded to the local device. Personalized links can be created by passing URL parameters. A crucial design feature of all tasks is a spatial layout that allows for discrete and continuous measures of participants’ click imprecision and is easily adaptable to different study requirements.
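
As a rough illustration of how personalized links and the JSON output described above could fit together, the following sketch reads study settings from URL parameters and assembles a result record. The parameter names (`id`, `trials`, `webcam`), the example URL, the defaults, and the field names in the record are assumptions made for this example, not the app's actual interface.

```javascript
// Hypothetical parameter names and defaults; the real app may use different ones.
const DEFAULTS = { id: "testID", trials: 15, webcam: false };

// Read study settings from a personalized link.
function parseStudyParams(link) {
  const params = new URL(link).searchParams;
  const trials = Number.parseInt(params.get("trials") ?? "", 10);
  return {
    id: params.get("id") ?? DEFAULTS.id,
    trials: Number.isInteger(trials) && trials > 0 ? trials : DEFAULTS.trials,
    webcam: params.get("webcam") === "true",
  };
}

// Assemble the JSON text holding metadata, trial specifications, and click responses.
function buildResultRecord(settings, trialSpecs, clicks) {
  return JSON.stringify(
    {
      meta: { ...settings, savedAt: new Date().toISOString() },
      trials: trialSpecs.map((spec, i) => ({ ...spec, click: clicks[i] ?? null })),
    },
    null,
    2
  );
}

// Example with an invented link and invented click coordinates.
const settings = parseStudyParams(
  "https://example.org/tango/?id=child042&trials=2&webcam=true"
);
const record = buildResultRecord(
  settings,
  [{ trial: 1, targetX: 320 }, { trial: 2, targetX: 1410 }],
  [{ x: 301, y: 880 }, { x: 1502, y: 874 }]
);
console.log(record);
```

Falling back to defaults for missing or malformed parameters keeps a mistyped link from breaking a remote session.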

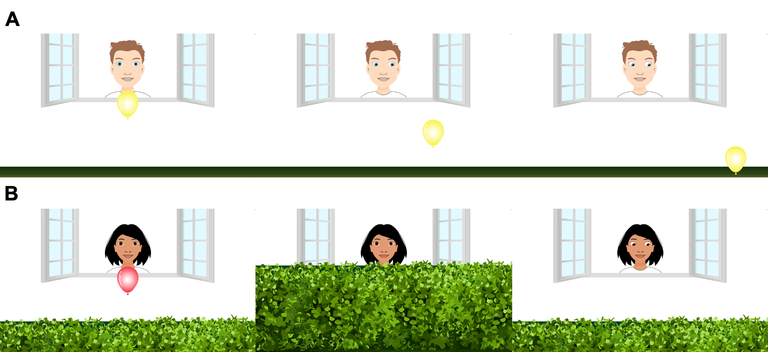

Our first task that utilizes the web implementation focuses on gaze understanding – the ability to locate and use the attentional focus of an agent. Participants are asked to locate a balloon by determining where an agent looks.
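
One way to obtain both a continuous and a discrete score from a single click is sketched below. It assumes a hypothetical scene that is 1920 units wide in SVG coordinates, a balloon 160 units wide, and a discrete variant with five equally wide boxes. These values and all function names are illustrative, not the task's actual parameters.

```javascript
// Illustrative scene constants in SVG user units (assumptions, not the task's real values).
const SCENE_WIDTH = 1920;
const TARGET_WIDTH = 160;
const BOX_COUNT = 5;

// Convert a click in screen pixels to SVG units so scores are comparable across devices.
function toSceneX(clickPx, renderedWidthPx) {
  return (clickPx * SCENE_WIDTH) / renderedWidthPx;
}

// Continuous measure: horizontal distance between click and target center, in target widths.
function continuousImprecision(clickX, targetCenterX) {
  return Math.abs(clickX - targetCenterX) / TARGET_WIDTH;
}

// Map a horizontal position to the index of the box it falls into.
function boxIndex(x) {
  const index = Math.floor((x / SCENE_WIDTH) * BOX_COUNT);
  return Math.min(Math.max(index, 0), BOX_COUNT - 1); // clamp clicks on the scene edges
}

// Discrete measure: did the click land in the same box as the target?
function discreteCorrect(clickX, targetCenterX) {
  return boxIndex(clickX) === boxIndex(targetCenterX);
}

// Example: a click at 400 px on a scene rendered 960 px wide, target centered at 880 units.
const clickX = toSceneX(400, 960); // 800 SVG units
console.log(continuousImprecision(clickX, 880)); // 0.5 target widths
console.log(discreteCorrect(clickX, 880)); // true: both fall in the third box
```

Expressing the continuous score in target widths rather than pixels makes it independent of the size at which the scene is rendered.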

We found inter-individual variation in both a child sample (N = 387) and an adult sample (N = 236), as well as substantial developmental gains. High internal consistency and test–retest reliability indicate that the captured variation is systematic.
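
For readers unfamiliar with these reliability indices, the sketch below shows one common way to compute them: a Pearson correlation between two sessions for test–retest reliability, and an odd-even split-half correlation with Spearman-Brown correction for internal consistency. It uses made-up scores and is not the analysis behind the results reported here.

```javascript
// Pearson correlation between two equally long arrays of scores.
function pearson(x, y) {
  const n = x.length;
  const mean = (values) => values.reduce((sum, v) => sum + v, 0) / n;
  const mx = mean(x);
  const my = mean(y);
  let sxy = 0;
  let sxx = 0;
  let syy = 0;
  for (let i = 0; i < n; i++) {
    sxy += (x[i] - mx) * (y[i] - my);
    sxx += (x[i] - mx) ** 2;
    syy += (y[i] - my) ** 2;
  }
  return sxy / Math.sqrt(sxx * syy);
}

// Mean of a non-empty array.
function meanOf(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Split-half reliability: correlate each participant's mean on odd vs. even trials,
// then apply the Spearman-Brown correction for the halved test length.
function splitHalfReliability(trialsByParticipant) {
  const odd = trialsByParticipant.map((t) => meanOf(t.filter((_, i) => i % 2 === 0)));
  const even = trialsByParticipant.map((t) => meanOf(t.filter((_, i) => i % 2 === 1)));
  const r = pearson(odd, even);
  return (2 * r) / (1 + r);
}

// Made-up imprecision scores (rows: participants, columns: trials), for illustration only.
const session1 = [
  [0.2, 0.3, 0.1, 0.2],
  [1.1, 0.9, 1.3, 1.0],
  [0.6, 0.5, 0.7, 0.8],
  [1.8, 2.1, 1.7, 1.9],
];
const session1Means = session1.map(meanOf);
const session2Means = [0.25, 0.95, 0.7, 1.6]; // made-up retest means

console.log("split-half:", splitHalfReliability(session1).toFixed(2));
console.log("test-retest:", pearson(session1Means, session2Means).toFixed(2));
```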

Our second task is currently under development and focuses on children’s ability to understand partial knowledge states. Do children understand what other agents can and cannot know? In the past, this question has often been measured dichotomously. We now also include cases in which agents have only partial knowledge. This work shows a promising way forward in studying individual differences in social cognition and will help us explore the structure and development of our core social-cognitive processes in greater detail.

Further information on our task and its implementation can be found in this preprint: https://psyarxiv.com/vghw8. The code is open-source (GitHub – ccp-eva/tango-demo), and a live demo version is available here: tango.

Another task that uses our infrastructure is an Item Response Theory-based open receptive vocabulary task for 3- to 8-year-old children. A live demo version can be found here: oREV.