Bridging the Technological Gap Workshop - 2nd Edition

Spreading technological innovations in the study of cognition and behavior of human and non-human primates

4th August 2024 → 10th August 2024 at the MPI EVA

Pierre-Etienne Martin, PhD, Max Planck Institute for Evolutionary Anthropology, Leipzig, Germany

Laura Lewis, PhD, University of California, Berkeley, CA, USA

Hanna Schleihauf, PhD, Utrecht University, Netherlands

Introduction

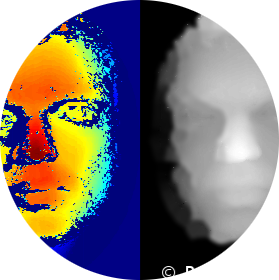

Technological breakthroughs create immense opportunities to revolutionize scientific methodologies and enable answers to questions that were previously unanswerable. Disciplines studying behavior and cognition across species are struggling to keep up with the rapid technological developments. In 2022, we organized a workshop to help bridge this technological gap. Through the workshop we (a) trained 31 early career researchers in the use of cutting-edge non-invasive technologies including motion-tracking, eye-tracking, thermal imaging, and machine learning extensions, (b) developed guidelines and common standards in the use of these methods, (c) created an online platform with a diverse network of researchers, and (d) initiated an interdisciplinary collaboration project, involving most of the workshop participants, that uses thermal imaging with many different species. Building on the success of the 2022 event, we plan to organize this workshop again. Our goal is to continue providing opportunities for early career researchers to gain expertise in innovative technologies, build connections between interdisciplinary and international scholars, and foster the exchange of ideas and future collaborations.

Program

Subject to modifications.

Welcoming of the participants

Sunday, August 4, 2024

| 17:00 – 21:00 | Welcome Reception at the MPI EVA with IT Help Desk |

Part I - Introduction and Computational Basics

Monday, August 5, 2024 – Intro and Building foundations

| 09:00 – 09:30 | Laura Lewis, Hanna Schleihauf & Pierre-Etienne Martin | Opening of the Workshop |

| 09:30 – 11:00 | Fumihiro Kano & Alex Hoi-Hang Chan | Keynote Importance of Tech in the study of the human and non-human animal mind |

| 11:00 – 11:30 | Coffee Break | |

| 11:30 – 12:30 | Bret Beheim | Hands-on “Let’s Git” - Intro to Git and GitHub |

| 12:30 – 13:30 | Lunch Break | |

| 13:30 – 15:00 | Luke Maurits | Hands-on Intro to R and R studio |

| 15:00 – 15:30 | Coffee Break | |

| 15:30 – 17:00 | Pierre-Etienne Martin | Hands-on Intro to Python |

| 17:30 – 20:00 | Poster Session & Snacks |

Tuesday, August 6, 2024 – Foundations of Machine Learning

Located at the Wolfgang Koehler Primate Research Centre at the Leipzig Zoo

| 09:00 – 10:30 | Arne Nix & Mohammad Bashiri | Introduction to the Basics of ML – Part 1 |

| 10:30 – 11:00 | Coffee Break | |

| 11:00 – 12:30 | Arne Nix & Mohammad Bashiri | Introduction to the Basics of ML – Part 2 |

| 12:30 – 14:00 | Lunch at Leipzig Zoo | |

| 14:00 – 15:00 | Otto Brookes | Animal Biometrics/Imageomics for Great Apes |

| 15:00 – 18:00 | Tour through the Wolfgang Koehler Primate Research Centre at the Leipzig Zoo | |

| 18:00 – 20:00 | Social Gathering in the City Center | |

Part II - Training in the use of new technologies to study the human and non-human animal mind

Wednesday, August 7, 2024 – Gaze Tracking & Pupillometry

| 09:00 – 10:00 | Maleen Thiele & Christoph Völter | Keynote |

| 10:00 – 10:30 | Coffee Break | |

| 10:30 – 12:30 | Tommaso Ghilardi, Francesco Poli & Giulia Serino | Hands-on Eye Tracking with Python |

| 12:30 – 13:30 | Lunch Break | |

| 13:30 – 15:00 | Tommaso Ghilardi, Francesco Poli & Giulia Serino | Hands-on Eye Tracking with Python |

| 15:00 – 15:30 | Coffee Break | |

| 15:30 – 17:00 | Tommaso Ghilardi, Francesco Poli & Giulia Serino | Hands-on |

| 17:30 – 19:00 | City Tour by bus | Meeting in front of the MPI EVA (bring your swimsuit if you like; not mandatory) |

| 19:30 – 21:30 | Dinner with Speakers | SOLE MIO Seeterrasse - Hafenstraße 23, Markkleeberg |

Thursday, August 8, 2024 – Thermal Imaging

| 09:00 – 11:00 | Rahel Brügger | Basics of Thermal Imaging |

| 11:00 – 11:30 | Coffee Break | |

| 11:30 – 12:30 | Rahel Brügger | Hands-on Basics of Thermal Imaging |

| 12:30 – 13:30 | Lunch Break | |

| 13:30 – 15:00 | Pierre-Etienne Martin | Bio-TIP: Bio-Signal Retrieval from Thermal Imaging Processing |

| 15:00 – 15:30 | Coffee Break | |

| 15:30 – 17:00 | Pierre-Etienne Martin | Hands-on Bio-TIP: Bio-Signal Retrieval from Thermal Imaging Processing |

| 17:00 – 20:00 | Social Gathering on the Roof Terrace | |

Friday, August 9, 2024 – Motion Tracking

| 09:00 – 10:00 | Raphaelle Malassis & Rayanne Martin | Beyond response times: Tracking apes’ hand trajectory on a touchscreen |

| 10:00 – 11:00 | Raphaelle Malassis & Rayanne Martin | Hands-on Beyond response times: Tracking apes’ hand trajectory on a touchscreen |

| 11:00 – 11:30 | Coffee Break | |

| 11:30 – 12:30 | Tim-Joshua Andres & Arja Mentink | Motion-Tracking |

| 12:30 – 13:30 | Lunch Break | |

| 13:30 – 15:00 | Tim-Joshua Andres & Arja Mentink | Hands-on |

| 15:00 – 15:30 | Coffee Break | |

| 15:30 – 17:00 | Charlotte Ann Wiltshire | Hands-on |

| 17:00 – 17:30 | Laura Lewis, Hanna Schleihauf & Pierre-Etienne Martin | Closing of the Workshop |

| 18:30 – 22:00 | Goodbye dinner and social events | |

Saturday, August 10, 2024 – Goodbye

| 8:00 – 11:00 | Breakfast at the hotel and last goodbye |

Accommodation

Participation in the workshop itself is free. We have booked rooms at Jahrhunderthotel in Leipzig, which costs 285 euros for a double room (shared with another participant), or 396 euros for a single room for 6 nights (breakfast included). Applicants are required to cover the costs of their travel and accommodation. If applicants cannot secure funding for travel and accommodation from their institutions, they are welcome to apply for potential funding through the workshop hardship travel fund located at the end of the workshop application.

Application Instruction

The application procedure is performed through Lime Survey.

You will enter:

- basic identification information,

- a motivation text (2500 characters describing why you are interested in participating in this workshop),

- an abstract for your poster presentation (max 2500 characters including spaces),

- funding information, for budget organization purposes,

- accommodation and diet preferences,

- additional free text if you wish to share something with us,

- consents for processing your information for the workshop application (mandatory) and for sharing some of your information on the "Participants" section of this website (optional).

We strongly advise preparing the motivation letter and abstract in advance to avoid data loss if you are unintentionally disconnected from the survey.

We also strongly advise participation at the poster session for everyone to encourage discussion.

Application form here.

Application deadline: 30.04.2024

Poster Instruction

Monday, August 5, 2024 from 17:30 to 20:00 in the main hall of the MPI EVA

Posters should be, if possible, A0 format and portrait oriented. Other formats are tolerated. If you design a new poster for our Workshop, feel free to incorporate our logo.

The poster session will consist of two 1-hour sessions + breaks in the main hall in a free interactive format. Participants will be allocated to one of the sessions once on site.

We welcome the re-use of past posters for our event, even if they slightly diverge from our requirements.

Instructions for Hands-on Sessions

This year, we introduce a Workshop GitHub page listing all the necessary instructions to complete the hands-on installation. Please complete the installation steps before the beginning of the workshop.

In addition, an IT help desk will be provided on site.

Speakers

Postdoc - Tech. Dev. Coord.

Department of Comparative Cultural Psychology, Max Planck Institute for Evolutionary Anthropology, Leipzig, Germany

Pierre-Etienne Martin is currently a Postdoctoral Researcher and Tech Development Coordinator at the Max Planck Institute for Evolutionary Anthropology, within the Comparative Cultural Psychology Department. He received his M.S. degree in 2017 from the University of Bordeaux, Pázmány Péter Catholic University, and the Autonomous University of Madrid through the Image Processing and Computer Vision Erasmus Master Program. He obtained his PhD, which was labeled European, from the University of Bordeaux in 2020, focusing on the topic of video detection and classification using convolutional neural networks. He now applies and develops computer vision tools with the aim of better understanding both human and nonhuman animal minds.

Assistant Professor

Utrecht University

Hi, I am Hanna. I study how human children navigate their social and physical worlds. In particular, I am interested in their epistemic practices. I ask questions like: How do they seek information? Do they form and revise their beliefs based on evidence? And how do they consider this information when interacting with others?

Postdoctoral Fellow

University of California, Berkeley

I am a comparative psychologist and biological anthropologist who is fascinated by the evolution of great ape social cognition. Specifically, my research explores how humans and our closest living phylogenetic relatives, chimpanzees and bonobos, have evolved the cognitive mechanisms used to form, build, and maintain social relationships. I use non-invasive eye-tracking technology and other methods with human children, chimpanzees, and bonobos to uncover the evolutionary pressures and developmental patterns that shape human and nonhuman great apes’ patterns of social attention and curiosity, long-term memory, language comprehension, and emotion understanding.

PhD student

Centre for the Advanced Study of Collective Behaviour, University of Konstanz

I am Alex Chan, a PhD student working on machine learning and computer vision methods for animal behaviour, specifically doing 3D posture reconstruction across a range of bird species. Hopefully I can convince you why computer vision is fun and how it can change how we collect data with animals!

Postdoc

University of Cambridge

Francesco completed his PhD at the Donders Institute in Nijmegen, the Netherlands, with a thesis on the mechanisms underlying active learning and curiosity across development, with a special focus on infants. He then moved to Cambridge, where he is currently working on generative network models of brain development.

Postdoc

Utrecht University

After completing my Master’s degree in Biological Anthropology at the University of Zürich (CH), I moved to the UK to pursue a PhD in Behaviour Informatics at the University of Newcastle. During my PhD, I developed and built an in-cage camera module designed to collect long-term video data of pair-housed macaques. I utilized segmentation algorithms to analyse these videos, enabling the identification of individual animals and prediction of their locations. These methodologies not only detect behavioural divergences due to welfare-impacting events but also retain information on individual differences.

Currently, I am a Postdoctoral researcher at Utrecht University in the Netherlands, where I am based at the Biomedical Primate Research Centre (BPRC). My work focuses on implementing computer vision methods to extract valuable information from video data of breeding groups of macaques, aiming to contribute to the improvement of their welfare and management as well as aiding in the data collection for research projects.

PhD student

University of Tübingen

I am a PhD student at the University of Tübingen, working at the intersection of machine learning and neuroscience. My research is mainly focused on functional transfer methods like knowledge distillation and how they deal with inductive biases and robustness properties.

Senior Scientist

Department of Comparative Cultural Psychology, Max Planck Institute for Evolutionary Anthropology, Leipzig, Germany

I am a research group leader at the Department of Comparative Cultural Psychology (MPI-EVA) and a Postdoctoral Researcher at the Messerli Research Institute (University of Veterinary Medicine Vienna), interested in the evolutionary and developmental origins of basic cognitive abilities.

How do (human and nonhuman) animals solve problems? How do they identify and represent causal relations in their physical and social environment? In my research, I try to answer these questions using non-invasive, behavioral experiments. Currently, I am particularly interested in understanding how great apes and dogs process physical regularities as well as how and when they attribute agency including mental states such as intentions and beliefs. I am also interested in the active learning and information-seeking abilities of nonhuman animals. To address these topics, I use, aside from behavioral experiments, eye-tracking and machine-learning-based 3D tracking technology. I am also involved in the coordination of a large-scale research collaboration in primate cognition research, the ManyPrimates project.

Group Leader

Department of Comparative Cultural Psychology, Max Planck Institute for Evolutionary Anthropology, Leipzig, Germany

I'm a psychologist interested in the developmental foundations of human social learning. In my doctoral research, I studied how preverbal infants attend to social interactions between others, how their own intrinsic motivations shape their attentional orienting, and how they come to use social partners to learn about their environment. During my postdoc, we initiated a comparative project currently investigating how joint attention influences early memory stages of object-related learning across great ape species.

Since 2023, I have been leading a research group at the Department of Comparative Cultural Psychology at the MPI for Evolutionary Anthropology, focusing on observational social learning and visual attention orienting in the first years after birth. The methodological focus of the group is on refining eye-tracking technology for its application with humans and non-human great apes and in remote field settings.

Arja Mentink

Master student

Utrecht University

I am a master's student in AI and behavioural ecology at Utrecht University. I am currently working on my master's thesis, looking into the possibilities of creating a social network from video data.

Rayanne Martin

Research Assistant

CNRS Aix-Marseille

I am a research assistant specializing in metacognition and social cognition. I use machine learning to track and analyze apes' hand movements during touchscreen experiments to study their decision-making processes.

PhD student

Centre for Brain and Cognitive Development, Birkbeck, University of London

I am a PhD student at the Centre for Brain and Cognitive Development at Birkbeck, University of London. I pursued a BSc in Psychological Sciences from the University of Milan, Bicocca, and an MSc in Cognitive Neuroscience from the University of Padova. During my studies, I joined the Baby & Child Research Centre at the Donders Institute (NL) and the Baby Learning Lab at the University of British Columbia (CA) to deepen my expertise in brain and cognitive development.

Currently, my research focuses on tailoring fNIRS technologies to neurodiverse children and understanding the interaction between memory and attention. Specifically, I am interested in understanding the role of distraction from a developmental perspective and how previous experiences guide the developing child in selecting important information in the environment. To study this, I use a combination of behavioural and neuroimaging techniques.

Senior Researcher

HBEC, MPI-EVA

I use the tools of evolutionary ecology to help understand cumulative cultural dynamics in human societies. Specifically, I look at innovation and strategic retention of ideas and technology in noisy environments, and the demographic aspects of cultural change.

Senior Data Scientist

Noselab

I completed my PhD at the intersection of Computational Neuroscience and Machine Learning, working with Fabian Sinz, where I used deep neural networks, latent variable models, and implicit neural representations, to gain insights into the functional properties of visual sensory neurons. Currently, I am a Senior Data Scientist at Noselab, developing solutions for neurodegenerative diseases.

Postdoc

Department of Comparative Cultural Psychology, Max Planck Institute for Evolutionary Anthropology, Leipzig, Germany

Postdoc

Birkbeck University

I am a Postdoc at the Centre for Brain and Cognitive Development, Birkbeck University. I am fascinated by how young minds learn to predict events and plan interactions with their environment. I am amazed by how these young minds navigate the complexities of their surroundings, sometimes with remarkable success and other times with adorable missteps.

I use a multidisciplinary approach to explore these cognitive milestones, combining behavioural experiments, eye-tracking, brain imaging, and computational modelling. My work is all about unravelling the intricate processes behind children's developing predictive capabilities and their evolving strategies for engaging with the world.

Postdoc

Ecole Normale Supérieure, Paris

I am a cognitive psychologist working with primates. My research revolves around learning: what are the cognitive mechanisms involved in learning sequential regularities? Are there distinct mechanisms underlying the learning of statistical and grammatical regularities? What are their evolutionary and developmental histories? My recent research has delved into the connections between learning, consciousness, and metacognition, specifically investigating whether non-human primates use a combination of implicit and explicit learning processes similar to humans.

To study these questions, I have had the chance to join several primate cognition labs across Europe, including the Primatology Station of Rousset (France), St Andrews University (UK), Max Planck Institute for Evolutionary Anthropology (Germany), and the National Museum of Natural History (France). I specialize in touchscreen-based experiments and seek to develop more comprehensive behavioral measures that extend beyond accuracy and response times.

PhD Student

University of St Andrews

PhD student at the University of St Andrews studying machine learning and chimpanzee tool use behaviour.

PhD Student

University of Bristol

Postdoc

University of Zurich

I am a behavioural biologist with a special interest in primates (in particular common marmosets) and their understanding of conspecifics and their states. I am passionate about applying thermography methods, all things R and data viz! Currently I am working as a PostDoc in the Evolutionary Cognition Group at the University of Zurich.

Participants

Jack Terwilliger

PhD student

University of California, San Diego

Abstract: Everyday life frequently involves navigating crowded public spaces, whether it be perusing the stalls at a market, catching the bus to work, or walking around a festival. Successful navigation is a situated process of moving efficiently toward one’s goals without getting lost or colliding with anything. Often navigation is framed as requiring physical or spatial cognition. But it involves reasoning about social norms too. As accountable members of societies we must also abide by and negotiate the tacit proxemic norms which regulate the social use of space. For example, we tend to respect each other’s personal space, which people treat as “an invisible boundary around their body into which other people may not come”. Nearly all research on proxemics has focused on the norms governing the space between a subject and others’ personal space, yet a pervasive type of proxemic navigation problem arises from the space between people and between people and things. Here we consider how passersby navigate between or around dyadic interactions. We conducted a series of field experiments in which we placed a research actor at heavily trafficked locations at UC San Diego and observed when others were likely to walk between them and the person/thing they were interacting with. We manipulated whether they were holding a conversation, reading a sign, or looking at a mural. Additionally, we manipulated idioms of interactional involvement such as interpersonal distance, body orientation, gaze, and talk as well as actor gender. First, we show how passersby infer the presence and right of way of third-party interactions from these idioms. Second, we show that passersby take into account others’ goals (conversation is given priority over sign reading and sign reading is given priority over art appreciation). Third, we show that female actors were more likely to be interrupted.
However, male and female passersby did not interrupt others at different rates nor did they aim themselves around the actor at different distances. Fourth, from pedestrian trajectories, we measure the metabolic cost of abiding by proxemic norms.

Louise Mackie

PhD student

Messerli Research Institute, University of Veterinary Medicine Vienna

Abstract: Human children as young as 24 months old can imitate skills that they observe someone perform on video (Strouse and Troseth, 2008). TV programmes for young children, such as Dora the Explorer, actively encourage this for educational purposes. However, although young children are capable of learning from videos, there is a deficit in the quality of their learning when compared to face-to-face demonstrations (Strouse and Samson, 2020).

Humans are not the only ones who can learn from watching TV. Hopper et al. (2012) found that chimpanzees could learn from watching videos in a social learning context. Similar to children, the chimpanzees showed a bias to better copy a live demonstrator rather than a video one, by matching the direction in which the demonstrator opened a door on their first trial. Over time though, the videos became as efficient as live demonstrations. Live conspecific models elicit the most successful social learning (Hopper et al., 2015), but they are difficult to train and control for consistent demonstrations. Therefore, conspecific video demonstrations can offer a reliable alternative for investigating non-human social learning.

The current collaborative project utilizes conspecific video demonstrations and eye-tracking technology to investigate chimpanzee imitation. We provided chimpanzees with 18 video clips of a two-action task (alternating between a full-view conspecific demonstration and a close-up action demonstration) before they attempted the task for themselves. They were provided with four task attempts total (video demonstrations + task trials). Performance will be compared to human children using the same methods and apparatus. We aim to compare looking patterns and anticipatory gazing at apparatus components between the chimpanzees and children during the video demonstrations. We also ask whether there are differences between solvers and non-solvers, copiers and non-copiers of the two-action task.

I would also like to propose a related project idea using these methods to investigate social learning in domestic dogs. Dogs have been shown to match human methods to reach goals (Kubinya et al., 2003), to copy humans after a delay (Fugazza and Miklosi, 2014), and to copy irrelevant actions (Huber et al., 2018), making dogs an interesting species in which to test the effects of video demonstrations using eye-tracking technology.

Fay Clark

Lecturer

University of Bristol

Bio: I am a UK-based academic interested in the comparative mental processes and mental health of animals. I look for connections between animal cognition, behaviour, environment and affective state. I have interdisciplinary training in zoology, biological anthropology and psychology. At the University of Bristol, I run the Comparative Challenge Lab and my work can best be described as experimental psychology with a comparative emphasis; I develop cognitive task apparatuses to test cognitive skills, and/or provide 'enrichment' to impaired environments. There is also a human twist; even though I specialise in the study of nonhuman animals I draw heavily from human studies of learning, motivation and response to challenge. My research takes place on wild animals in their natural habitats, and housed in zoos (including traditional zoos, aquariums, safari parks and sanctuaries) or research centres. To date, I have focussed on 'High EQ' taxa, such as primates and cetaceans due to my background in dolphin cognition and biological anthropology. My current area of focus is whether 'flow state' exists in nonhuman animals but I am also interested in game psychology more generally and how technology can be used to eavesdrop on animals using cognitive tasks.

Emma McEwen

PostDoc

University of St Andrews

Abstract: Computerised technology is an increasingly popular tool for cognitive testing with non-human animals. Technology in cognitive research has numerous benefits, such as tighter control over stimuli presentation and recording responses, as well as for public engagement and science communication. Recently, virtual environment (VE) software has been successfully implemented in cognition research with non-human primates. In VEs, novel stimuli can be presented in innovative ways, opening the door to studying aspects of cognition in new task contexts, and studying phenomena in ways which may not be possible when restricted only to real-world space. We present evidence from capuchin monkeys (Sapajus apella) in a computerised virtual foraging task presented on a touchscreen. Capuchins learnt to move an avatar, viewed from a first-person perspective, around a virtual arena to collect virtual fruit. The capuchins engaged in progressively more challenging stages of the task and were able to turn their avatar to collect out of sight fruit, avoid obstacles, and move their avatar across increasing distances to collect fruit further away. Overall, capuchins were successful in learning the skills required for VE tasks. Future work within this virtual world will investigate capuchins’ performance in a virtual short-term memory task and assess the equivalence of their performance in a VE to that in real-world, physical memory tasks. We show here that VE touchscreen tasks are a feasible method for studying cognition with capuchin monkeys, offering the possibility to study primate cognition in novel and engaging ways without the physical constraints that are often present when designing apparatuses.

Oded Ritov

PhD Candidate

University of California, Berkeley

Abstract: Friendship and Chimpanzees’ Emotional Responses to Theft

While the agent-neutral set of expectations referred to as “morality” is thought to be unique to humans, chimpanzees, our closest living relatives, form complex dyadic expectations from their cooperative partners (Engelmann & Tomasello, 2017). Adult chimpanzees have about 3 close friends, with whom they share as much as 60-80% of their grooming time (Muller & Mitani, 2005). They trust these friends significantly more frequently than non-friends (Engelmann & Herrmann, 2016), and are likelier to choose to help them (Engelmann et al., 2019). What kind of expectations do chimpanzees have from their friends? And do they respond with anger to violations of these expectations?

According to the recalibrational theory of anger, anger responds to signals that the offending agent has placed too little value on the welfare of the angered individual (Sell et al., 2017). Through displaying anger, the victim communicates the expectation that more weight be placed on their welfare in the future. According to the recalibrational theory of anger, we expect more from our friends, and are more angered when they make decisions that place little value on our welfare than we are when nonfriends do so (Delton & Robertson, 2016), suggesting that chimpanzees might also be quicker to anger when a friend affronts them. Chimpanzees have previously been found to avenge theft and respond to it with anger (Jensen et al., 2007), making food theft an ideal context to investigate anger at the violation of social expectations.

The present study is an investigation of whether friendship affects chimpanzees’ responses to theft. Using a simple tray-pulling paradigm, we allowed chimpanzees to steal pieces of pineapple from a groupmate. We matched each “victim” subject with one friend and one neutral peer. The data for this study has been collected on Ngamba Island Chimpanzee Sanctuary and is currently being coded.
We will examine how vocalizations (whimpers, cries, and screams) and other expressive behaviors (such as banging and self-scratching) differ between the friend and nonfriend conditions. Furthermore, we will attempt to validate 3D motion tracking as a tool for measuring chimpanzees' emotional reactions. The results will deepen our understanding of the social expectations chimpanzees form with close relationship partners and the role of anger in regulating social behavior across phylogeny.

Juliana Wallner Werneck Mendes

PhD student

University of Veterinary Medicine of Vienna

Abstract: Human-induced environmental changes present wild animals with new challenges and new opportunities. Therefore, evaluating risk associated with anthropogenic objects is an important behavioral aspect to navigate environments with any degree of human influence. Moreover, understanding species-specific risk-taking tendencies is important for monitoring and conservation of wildlife and may help mediate human-animal conflict. The effect of habituation is particularly important, since management tools might lose effect if not associated with actual risk. Differences in neophobia responses are expected according to species’ socio-ecology. For example, it has been shown that social animals are more likely to be less neophobic when in groups than when alone. The non-excluding adaptive flexibility hypothesis suggests that generalist foragers benefit more from being less neophobic. Data to support (or not) these hypotheses are lacking in wild mammals. This study aims at expanding the scope of species studied in neophobia responses. We will investigate the effects of species and presence of conspecifics in animals’ reaction to a carcass associated with novel objects in African mammals. The study took place in Mawana Game Reserve in KwaZulu-Natal, South Africa, which is home to seven carnivores: genets, servals, black-backed jackals, leopards, caracals, spotted hyenas and brown hyenas. Moreover, other mammal species such as bush-pigs, warthogs and giraffes have been observed feeding on carcasses. To account for the effect of habituation, we presented four locations with accessible meat and had three conditions: no novel object, novel object 1, and novel object 2. Videos were captured with two camera traps per location, and we will analyze the species and number of individuals observed in each condition.
We hypothesize that: 1) all species will be more likely to feed from the carcass in the absence of the novel object than when it is present; 2) there will be a habituation effect and species will be more likely to eat from the carcass in the condition “novel object 2” than in “novel object 1”; 3) social animals will be more likely to approach the carcass with the novel object when in the presence of conspecifics; 4) generalist species will be more likely to feed from the carcass in the presence of the novel object than specialist species.

Camilla Cenni

PostDoc

University of Mannheim

Abstract: From monkeying around to tooling around? How object play shapes the emergence of tool use in long-tailed macaques.

Theoretically, object play is thought to facilitate the acquisition and expression of tool use; empirically, evidence for this claim is limited. We tested whether a cultural form of object play called “stone handling” facilitates the acquisition and expression of stone-tool use in a free-ranging group of Balinese long-tailed macaques.

Through field experiments, we tested whether individuals’ stone-play profiles predicted their ability to solve foraging tasks using stones as tools. Frequentist network-based diffusion analysis, Bayesian multilevel regression modelling, and description of individuals’ learning trajectories suggest that stone-tool-assisted foraging depended on various social and asocial learning strategies. Certain stone handling profiles, as well as other trait- and state-dependent variables, contributed to explaining the variance observed in stone-tool use in this population. This is the first experimental evaluation of the role of stone-directed play in the expression of stone-tool use, shedding light on the potential ecological and evolutionary implications of lithic technology in early humans.

James Brooks

PostDoc

Kyoto University Institute for Advanced Study

Abstract: The neuropeptide hormone oxytocin has become a central topic in mammalian social evolution for its importance in behaviours such as social gaze, ingroup-outgroup psychology, and bond formation. We here employed eye-tracking with exogenous oxytocin in order to study social cognitive differences in our two closest relatives, bonobos and chimpanzees, who are known to differ from one another in several behaviours previously linked to oxytocin. In two studies, we found that oxytocin acts differently in modulating bonobos’ and chimpanzees’ social gaze. First, it enhanced and accentuated species-typical face-scanning patterns (greater eye contact in bonobos, mouth looking in chimpanzees), and second, it promoted outgroup attention in species-relevant ways (specifically, greater attention to outgroup members of the sex primarily involved in intergroup encounters). These results together reiterate the importance of oxytocin in bonobo and chimpanzee sociality as demonstrated by field studies, and suggest oxytocin may play a key role in the evolution and divergence of Pan sociality. While oxytocin changed the pattern of attention differently in the two species, it generally interacted with existing socio-attentional biases similarly in the two species, in that it promoted attention to the face regions already most salient to each species and promoted outgroup attention to certain sexes relevant for intergroup behaviour. This suggests common general roles of oxytocin through human and non-human primate evolution, with differing species-relevant instantiations based on the species’ social and evolutionary background.

Luz Carvajal Villalobos

PhD student

Johns Hopkins University

Abstract: Previous research suggests that theory of mind capacities are supported by executive function in children. Inhibitory control and working memory have been proposed to contribute to false belief understanding (Carlson, Moses & Breton, 2002), and the depletion of executive function in children hinders their performance in false belief tasks (Powell & Carey, 2017). Carlson, Claxton and Moses (2015) propose that executive function is key to the emergence of theory of mind in children, not just its expression. They claim that understanding the possibility of perspectives different from one’s own relies on executive function as it allows children to detach themselves from what is immediately visible. The present study investigates the relationship between perspective-taking (measured through geometric gaze following), executive function (measured through working memory updating) and causal reasoning (measured through a physical cognition task) in chimpanzees. To date, this relationship has not been tested in humans’ closest relatives, the great apes. Access to research-naive populations is restricted by the limited number of sites with established infrastructure and populations, due to the demanding nature of housing and caring for apes. The chimpanzees at the Maryland Zoo in Baltimore (MZiB) present a valuable opportunity to study this relationship in subjects who are at the very beginning of their path as cognitive research participants. We tested eight chimpanzees (seven adults and one infant) and will use mixed-effects modeling to test the relationship between our variables of interest. Our primary hypothesis is that perspective-taking in chimpanzees depends selectively on executive function and, therefore, that individual differences in executive function performance (but not causal reasoning performance) will predict perspective-taking performance.
An alternative account holds that perspective-taking depends on causal reasoning, given that perspective-taking involves some causal reasoning about mental states, and thus predicts that individual differences in causal reasoning performance (but not executive function performance) will predict perspective-taking performance. These findings will deepen our understanding of the relationship between executive function and performance in cognitive tests in general, and its role in perspective-taking in chimpanzees.

Catia Correia-Caeiro

Senior Researcher / Guest Researcher

Human Biology & Primate Cognition (Leipzig University) / CCP MPI-EVA

Abstract: Despite growing interest, the perception of emotion cues is still an under-researched cognitive process. The dog has shared the same social environment as humans for more than 10,000 years, and dogs have some understanding of human facial expressions. However, we still do not fully understand the mechanisms behind it. Hence, two studies were undertaken to investigate the perceptual mechanisms of emotion cues, using an inter-specific model (humans and dogs). Study 1 investigated the relative importance of facial vs bodily cues perception throughout the life span. Study 2 investigated gaze patterns on facial expressions. An eye-tracker recorded eye movements of human and dog participants. In Study 1, we successfully sampled 129 humans and 92 family dogs of various breeds. In Study 2, we successfully sampled 26 humans and 27 family dogs of various breeds. Participants freely viewed 20 short videos of other humans and dogs displaying visual cues of emotion. The video stimuli displayed naturalistic emotion cues for fear, frustration, happiness, positive anticipation or no emotion in both dogs and humans. In Study 1, full body cues were visible for both videos of humans and dogs, whilst in Study 2, only the face was visible for both species. All video stimuli were controlled for facial movements display with the Facial Action Coding System for humans and dogs (DogFACS). Eye movements were analysed with Areas of Interest (AOIs) and corresponding viewing time. GLMMs with AIC and ANOVAs were used for statistical analyses. In Study 1, dogs attended more to the body than the head of human and dog figures, unlike humans, who focused more on the head of both species. Hence, bodies may be a more important source of emotion cues for dogs. Dogs and humans also showed a clear age effect that reduced head gaze. These results may reflect cognitive age-dependent changes or increased experience with emotion cues and inter-species interactions.
In Study 2, it was demonstrated that humans and dogs observe facial expressions of emotion by employing different gaze strategies and attending to different areas of the face. Although there might be an ancient mammalian homology in facial musculature and in neural systems for emotion between humans and dogs, this work shows that perception of emotion cues varies widely in these two species.

References: Correia-Caeiro et al. (2021). Animal Cognition, 24(2), 267-279. Correia-Caeiro et al. (2020). Animal Cognition, 23(3), 465-476.

Nicki Guisneuf

PhD student

University of Michigan

Abstract: How does habitat fragmentation affect home range, vocal behaviour, and group cohesion in wild white-faced capuchins?

Home range site fidelity is important for advantageous ecological knowledge, though home range shifts can be beneficial for optimising foraging across seasons. Habitat fragmentation can disrupt these shifts, limiting ability to travel and access to food, but also potentially impacting social behaviour and group dynamics. To investigate the effects of fragmentation on ranging patterns, vocal behaviour, and group cohesion, we compared two neighbouring groups of capuchin monkeys (Mesas and Tenori) at the Taboga Forest Reserve, in Guanacaste, Costa Rica. These groups experience different levels of fragmentation (M: continuous, T: fragmented).

We collected daily range data using a handheld GPS during full day follows for 2022 and 2023. We performed kernel density analyses using ArcGIS Pro (contour density = 2000 points per km²) to find core range in each season (dry: November-April; wet: May-October), plotted twitter vocalisations, and assessed group cohesiveness. We also conducted transects with a drone, collecting photos from three locations across the ranges of these two groups, to assess how the habitats change seasonally and differently depending on proximity to water sources like farm irrigation.

Mesas had a larger range overall (M: 1.4 km², T: 0.8 km²). Seasonally, monkeys were more cohesive in the wet season than the dry season (GLMM: SE = 0.03297, z = 2.78, p = 0.005), but more vocal in the dry season. Mesas had a larger core range in the dry season (0.6 km² dry, 0.4 km² wet), whereas Tenori had the opposite: larger in the wet season (0.3 km² dry, 0.5 km² wet). Mesas were more cohesive than Tenori, having more individuals within 5 m (GLMM: SE = 0.18, z = -2.82, p = 0.004) and more social vocalisations (rate M: 4.54, T: 3.35 per observation hour), with Tenori having even fewer in the wet season (1.99). Fragmentation therefore likely constrains home range overall, but also reduces communication and spacing, though further work is needed to fully understand how social dynamics beyond vocal behaviour and group spacing are affected. Mapping technology and drone photographs are important methods that allow us to obtain a bigger-picture view of primate behaviour across multiple years.

Rachel Robinson

PhD student

Nottingham Trent University

Abstract: Cultural difference and convergence of facial movements in bonobos (Pan paniscus)

Authors: Rachel Robinson, Prof Bridget Waller, Dr Clare Kimock, Dr Jamie Whitehouse

Variation in primate facial movements has been of interest to researchers for several decades, but without means to measure or quantify this variation. We can now quantify subtle differences in facial movements using the Facial Action Coding System (FACS), a reliable and objective tool, based on underlying facial musculature, with variations for other primate species (e.g. ChimpFACS). The variations that we observe within species may be shaped over time through social interaction, with individuals in a social group using similar facial movements. If convergence of facial movements enhances social bonding, then it could be strategic to adapt facial movements based on group membership. To investigate cultural differences and convergence of both the production and perception of facial movements, I will conduct two studies with the bonobos housed at Twycross Zoo, UK.

Study 1: An observational study to explore group differences in production of facial movements. The bonobos at Twycross Zoo are housed in fluid social groups with frequently changing group compositions (a door between two adjacent groups is on occasion opened to simulate fission-fusion and migration between groups). This provides an excellent opportunity to explore how communicative signals are shaped by the social environment, which, in these groups, is constantly changing.

Study 2: An experimental study to investigate group differences in perceptions of facial movements. I will present individuals with facial movement stimuli and investigate subjects’ attention to these facial movements using eye tracking equipment. I predict that there will be group differences in how individuals process and explore facial movements, with individuals attending to areas of the face which are more commonly used during communication within their specific group (e.g., more brow movement production in a group may lead to higher attention towards the brow area during perception). Data on facial movements produced by individuals in each social group, collected in Study 1, will inform the predictions of Study 2.

I will present preliminary data from Study 1. Data collection for Study 2 starts in September, and I would welcome feedback on the design and planned analyses. Funding for this project has been provided by the Primate Society of GB.

Chiara Zulberti

PhD student

University Leipzig

Abstract: ChimpLASG: a form-based approach to the classification of chimpanzee gestures.

The conventional method for categorizing primate gestures relies on top-down approaches, wherein gestures are assigned predetermined categories based on researchers' discernment. However, this approach often lacks consistency in the applied criteria. For instance, while the gesture "poke" is characterized by a stretched fingers handshape, similar movements may all be categorized as "touch," regardless of the body part involved. Despite these limitations being acknowledged, there is to date no formal coding scheme for primate gestures that does not rely on predefined categories. This study aims to address this gap by introducing an annotation system that primarily describes chimpanzee gestures through formal parameters before assigning them to gesture types. Drawing inspiration from a linguistic annotation system for human co-speech gestures known as LASG (Bressem et al., 2013), we adapted it for chimpanzee gestures. The resulting coding scheme, ChimpLASG, comprises 13 formal parameters. It begins by subdividing gestures into phases, focusing on the smallest unit, the "stroke." Each stroke is then described in terms of hand-wrist-elbow configuration, orientation, and position, as well as movement characteristics like descriptor, direction, trajectory, quality, and motor pattern, along with touch details such as quality, body part involved in touching, and body part being touched. Currently, ChimpLASG is fully developed and undergoing its initial application for video coding in ELAN, using a dataset of semi-wild chimpanzees in Chimfunshi, Zambia. By employing form-based approaches like ChimpLASG, we can mitigate existing biases in primate gesture classifications, facilitating further exploration into gestural units, compositionality, and the correlation of gesture formal parameters with demographic, social, and ecological factors.

Authors: Chiara Zulberti1, Federica Amici1,2, Katja Liebal1,2, Jana Bressem3, Silva Ladewig4, Linda Oña1,5

Affiliations: 1Faculty of Life Sciences, University Leipzig, Germany; 2Max Planck Institute for Evolutionary Anthropology, Leipzig, Germany; 3Technische Universität Chemnitz, Germany; 4Georg-August-Universität Göttingen, Germany; 5Max Planck Institute for Human Development, Berlin, Germany

Ana Tomašić

PhD student

University of Veterinary Medicine, Vienna

Abstract: VIOLATED EXPECTATIONS STIMULATE CURIOSITY IN DOGS

Christoph J. Völter 1,2, Ana Tomašić 1, Laura Nipperdey 3, Ludwig Huber 1

1 Messerli Research Institute, University of Veterinary Medicine Vienna, University of Vienna and Medical University of Vienna, Austria

2 Department of Comparative Cultural Psychology, Max Planck Institute for Evolutionary Anthropology, Germany

3 Faculty of Veterinary Medicine, University of Leipzig, Germany

Previous research on human infants shows that violations of basic physical rules cause longer looking times and increase exploration, serving as a form of hypothesis testing for acquiring knowledge. Our study aimed to investigate if a connection between expectancy violation and exploration also exists in nonhuman animals. Specifically, we focused on pet dogs and their reactions to expectancy violations in occlusion events. We carried out three experiments: Experiment 1 (N=14) and Experiment 2 (N=17) were between-subject design eye tracking studies, while Experiment 3 (N=63) used a within-subject design in which dogs observed manipulation of an object on a stage. In Experiments 1 and 2, dogs watched two separate, short videos on a screen. During the disappearing condition, physical principles of occlusion were violated as the ball disappeared when passing between the two occluders. The physical laws were not broken during the reappearing condition, which was used to control for the novelty of the stimulus. In Experiment 3, dogs observed a real-life demonstration of the ball either disappearing, or reappearing between the two occluders. Observation was followed by an exploration phase in which the dog could choose to interact with the target object or two distractors. Dogs exhibited dilated pupils and prolonged gaze durations in response to improbable occlusion events, when compared to reappearing events. They also explored target objects for a longer period of time after expectancy-violating disappearance events, possibly driven by surprise and novelty. This suggests that, similarly to human infants, expectancy violations can present learning opportunities for pet dogs.

Kata Horváth

Self-employed postdoctoral researcher with the Department of Philosophy, Lund University

Abstract: The use of fire represents one of the most significant innovations in the history of humanity, having profoundly influenced the course of human evolution. Modern humans derive a sense of relaxation from watching fire, so it may be possible that early hominins experienced something similar, which helped to facilitate interactions with fire. Yet the precise way this developed, including the neurocognitive changes that may have been required, remains largely unknown. In this pilot study, we employed a fully controlled within-subject design to investigate the electrophysiological correlates of fire watching using electroencephalography (EEG) and heart rate variability (HRV). Six healthy young adults participated in a three-session experiment. Each session comprised the screening of a 20-minute audio-visual recording of either a bonfire, a waterfall (another natural stimulus associated with relaxation), or a repetitive, geometric animation with white noise in the background (an arbitrary stimulus likewise considered relaxing). EEG and HRV were recorded throughout. The EEG results revealed that frontal theta activity was more prominent in the bonfire condition compared with the other two. This finding aligns with previous studies on meditative states, focused attention, and mind wandering. Frontal alpha activity, also linked to meditative states, mind wandering, and unfocused wakefulness, likewise showed an increase selectively in the bonfire condition. Frontal beta activity, which is most often linked to focused attention and cognitive task solving, did not differ significantly between conditions. Notably, HRV exhibited no condition- or time-related effect, suggesting that the cardiovascular system may be less sensitive to relaxing stimuli. Overall, these preliminary results provide the first electrophysiological evidence that watching fire, but not other relaxing scenes, induces a neurocognitive state akin to meditation and mind wandering.

Coralie Galvagnon

PhD student

Institute of Genetics and Animal Biotechnology of the Polish Academy of Sciences

Abstract: Identifying methodologies to assess positive animal welfare in farm animals

Coralie Galvagnon1, Linda Keeling2, Laura Webb3, Irene Camerlink1

1 Institute of Genetics and Animal Biotechnology, Polish Academy of Sciences, Postepu 36, 05-552 Jastrzebiec, Poland

2 Swedish University of Agricultural Sciences, Box 7068, 750 07 Uppsala, Sweden

3 Wageningen University, POB 338, 6700 AH Wageningen, Netherlands

COST Action ‘LIFT: Lifting farm animal lives – laying the foundations for positive animal welfare’ is a 4-year EU-funded research network. The network aims to provide the background for including positive welfare in farm animal welfare assessment schemes. The traditional approach within animal welfare was to prevent animal suffering. Consequently, there is a large bias in the science of animal welfare towards the study of negative experiences. This is still important, but recent scientific advances also consider animals’ positive experiences, in order to gain insight into how animals can be given the opportunity for positive animal welfare. LIFT started in November 2022 and will continue to November 2026. Currently, the network has more than 300 participants from over 43 different countries. One work package is dedicated to identifying valid methodologies for assessing positive animal welfare. The work package consists of researchers from various disciplines, including animal sciences, neurosciences and social sciences. One of its tasks is to report on successful and unsuccessful test paradigms that have been carried out in the past. The working group will assess the identified methods for their suitability to assess positive welfare in farm animals. Currently, there are sub-working groups on, amongst others, indicators of cumulative welfare, choice and agency, low arousal positive states, affect balance, circadian rhythms, and play behaviour. There are numerous challenges when assessing subjective experiences in animals. Technologies developed originally for humans are an increasing source of inspiration and one of the areas being explored within this network.

Jonathan Webb

Research Assistant, self-employed with Lund University

Abstract: Yellowstone Ravens: Taking a Computational Approach to Studying Fire Behaviour (Jonathan Webb, Dr Ivo Jacobs, Prof Matthias-Claudio Loretto).

Complex fire use is often cited as an example of unique human cognitive achievement; however, many species regularly encounter fire and have evolved behavioural adaptations to interact with it. One of the most sophisticated cases may be pyrocarnivory, where predators stalk wildfires to catch prey that has been flushed out, disoriented, fatigued, and/or injured by fire. This has been observed in several raptor species in North America and Australia, but our preliminary data suggest that other families, such as corvids, may also hunt using wildfires. Systematic study of pyrocarnivory has so far remained limited, as previous studies have relied on first-hand field observations. Identifying larger-scale patterns using this approach would be logistically difficult, expensive, and time-inefficient.

This study pilots a novel computational approach for analysing spatiotemporal patterns in fire-related behaviour. Using publicly available datasets on wildfire occurrence (NASA remote-sensing systems) and animal movements (GPS data from the Movebank database), this study is examining the relationship between fire events and the movements of 60 GPS-tagged ravens (Corvus corax) in and around Yellowstone National Park, US, across three years. Raven movements, such as the propensity to move towards or away from wildfires, as well as the effect of environmental and ecological factors, will be investigated. As work on this project is still ongoing, methods and preliminary results will be presented.
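As a rough illustration of the kind of spatiotemporal measure such an approach might use, the sketch below computes the great-circle distance between a GPS fix and a fire detection, and whether a bird moved closer to the fire between two consecutive fixes. This is not the authors' pipeline: the function names, the coordinate tuples, and the choice of the haversine formula are all assumptions made for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def approach_km(fix_before, fix_after, fire):
    """Hypothetical helper: km by which a bird closed in on a fire.

    Each argument is a (lat, lon) tuple. A positive value means the
    second fix is closer to the fire than the first; negative means
    the bird moved away.
    """
    return haversine_km(*fix_before, *fire) - haversine_km(*fix_after, *fire)


if __name__ == "__main__":
    # Made-up coordinates, for illustration only.
    fire = (45.0, -110.0)
    print(approach_km((44.0, -110.0), (44.5, -110.0), fire))
```

A real analysis would also need to match fixes and fire detections in time and account for the environmental covariates mentioned above; this sketch covers only the distance step.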

With vast amounts of animal tracking and satellite data available online, this approach could pave the way for remote, low-cost research that could reveal fire associations in new populations. Wildfire regimes around the world are becoming increasingly sporadic due to human-driven factors, and are responsible for the decline of a large proportion of threatened species. Understanding how and why animals associate with wildfires, and incorporating this into biodiversity conservation strategies, will be indispensable in protecting populations now and into the future.

Luke Townrow

PhD student

Johns Hopkins University

Abstract: Numerous uniquely human phenomena, ranging from teaching to our most complex forms of cooperation, depend on our ability to tailor our communication to the knowledge and ignorance states of our social partners. Despite four decades of research into the “theory of mind” capacities of nonhuman primates, there remains no evidence that primates can communicate on the basis of their mental state attributions, to enable feats of coordination. Moreover, a recent re-evaluation of the experimental literature has questioned whether primates can represent others’ ignorance at all. The present pre-registered study investigated whether bonobos are capable of attributing knowledge or ignorance about the location of a hidden food reward to a cooperative human partner, and utilizing this attribution to modify their communicative behavior in the service of coordination. Bonobos could receive a reward that they’d watched being hidden under one of several cups, if their human partner could locate the reward. If bonobos can represent a partner's ignorance and are motivated to communicate based on this mental state attribution, they should point more frequently, and more quickly, to the hidden food’s location when their partner is ignorant about that location than when he is knowledgeable. We found evidence demonstrating that bonobos indeed flexibly adapt their rates of communication through imperative pointing according to their partner’s mental state. These findings suggest that apes can represent (and act on) others’ ignorance in some form, strategically and appropriately communicating to effectively coordinate with an ignorant partner and change his behavior.

Juan Ignacio Nachon

PhD student

Unit of Applied Neurobiology (UNA, CEMIC-CONICET) Buenos Aires, Argentina

Abstract: Rationale

Systematic observations of children in naturalistic settings are still rare, particularly when compared to more ubiquitous forms of behavioral assessment (e.g., controlled laboratory tasks and indirect caregiver reports), but these approaches could complement traditional methods, improving overall ecological validity. Direct observation might help developmental scientists to better grasp how relational processes unfold in children’s everyday lives. Current leaps in computer vision and pattern recognition technology hold the prospect of automating the assessment of phenomena that have largely been studied indirectly, in vitro, or at great expense for researchers. The exponential growth of massive behavioral data repositories would certainly ensue from coordinated efforts made by international partners. Which opportunities and potential pitfalls should scientists, decision makers and other stakeholders be aware of?

Sample

127 primarily neurotypical child-caregiver dyads (~50% female; age range: 4-9 yrs) were filmed in a semi-structured play session during a visit to a local science museum (activities: rock-paper-scissors and origami folds). Afterwards, caregivers reported on the family's SES, child’s health history and temperament, while the child was assessed with a computerized battery of EF tasks and two subtests from the WJ-III.

Methods

Audiovisual records are being coded manually using BORIS for facial expressions, gaze and verbal behavior. Simultaneously, head-pose and facial action units are being estimated with pretrained open-source models included in the py-feat toolbox, and verbal expressions are being diarized and transcribed with a pyannote-whisper pipeline.

Hypotheses

Children that exhibit fewer bouts of fussiness; spend a larger proportion of time engaged with activity-relevant targets; and show greater levels of affective and attentional synchrony with their caregivers will have more effective performances in EF and academic achievement tasks, even when controlling for individual and context level covariates.

Inter-observer reliability estimates between manual coders and model inference outputs will be low to moderate.
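The abstract does not say which reliability statistic will be used. As one common option for categorical codes, the sketch below computes Cohen's kappa between a manual coder's labels and a model's labels for the same frames. The function name and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("label sequences must be non-empty and equal length")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Made-up frame-level codes, for illustration only.
    manual = ["smile", "neutral", "smile", "neutral"]
    model = ["smile", "neutral", "neutral", "neutral"]
    print(cohens_kappa(manual, model))
```

Continuous outputs such as head-pose angles would call for a different statistic (for example an intraclass correlation), so kappa here is only a sketch for the categorical case.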

Discussion

Potential sources of bias in this paradigm.

The case for open-source, ad-hoc implementation of ML models in developmental research.

Technoethical challenges: participant biometric data security and integrity.

Akshaye Bhambore

PhD student

University of Oulu

Abstract: Understanding the cognitive abilities of non-human organisms has long been a subject of scientific interest. Insight is a cognitive process defined as the ability to solve complex problems using novel solutions, without trial-and-error or associative learning. In this study, we investigated whether bumblebees (Bombus terrestris) can solve problems spontaneously. Bees are known for their remarkable learning abilities, particularly when it comes to foraging and recognizing specific cues. We sought to determine whether bees could adapt their learned behaviours to solve complex problems in a novel context.

In our study, four groups of bees underwent distinct training protocols and were subsequently tested in a new, modified task. The first group was trained to associate a blue ring on the arena floor with a reward, and to manipulate a ball covering the blue ring. In the novel context, the blue ring was relocated to the arena’s roof, requiring the bees to move the ball underneath it and climb onto the ball to reach the ring. The second group received similar training but encountered a relocated green ring in the novel context, while the third group was exclusively trained to associate the blue ring on the floor with a reward, lacking prior information about the ball. To understand the role of perceptual feedback, the individuals in the fourth group were required to move the ball through a hole to solve a visually restricted task.

Our findings revealed that the first group of bees successfully solved the task in the novel setting. In contrast, the second group exhibited significantly lower success rates, and in the third group only one out of twenty-one bees accomplished the task. Individuals in the first group were more likely to attempt to obtain the reward, even among those who didn’t solve the task. Seven out of twenty bees in the fourth group solved the visually restricted task, indicating that perceptual feedback is important but not necessary to solve the task.

In conclusion, this study provides compelling evidence that bumblebees are capable of insightful problem-solving, as demonstrated by the first group’s success in adapting to a novel context. The differing performance across groups highlights the importance of prior training in problem-solving. The findings also underscore the potential for bumblebees to exhibit different cognitive strategies, showcasing the complexity of their learning and problem-solving abilities.

Csilla Paraicz

Research Assistant, soon a PhD student

Nottingham Trent University

Abstract: Human communication is multimodal, yet research investigating the origins of human language has primarily examined communicative modalities (e.g. vocalisations, facial expressions) in isolation. Using a multimodal approach is essential to understand how human language has evolved from ancestral systems common to extant primate species. Multimodal communication in primates has been suggested to offer advantages compared to unimodal signaling, by speeding up information processing, increasing clarity, and lessening the risk of misunderstandings in high-stakes situations or social contexts (resulting in aggression), but empirical data are sparse. According to the Social Complexity Hypothesis (Freeberg et al., 2012), more socially complex species should demonstrate more complex communication systems to better navigate their social landscapes. Therefore, multimodal communication could be functionally related to aspects of social complexity, such as social tolerance. In this PhD I will examine multimodal communication in two primate species who exhibit socially tolerant social systems (Pan paniscus [bonobos] and Macaca nigra [crested macaques]) and compare them to species with much less tolerant social systems (Pan troglodytes [chimpanzees] and Macaca mulatta [rhesus macaques], respectively). Through analysis of valuable existing video datasets of rhesus macaques and crested macaques and collecting new observations of chimpanzees and bonobos at Twycross Zoo, I will examine the rate of multimodal signals produced and the contexts in which they are used to test the Social Complexity Hypothesis. Finally, through cognitive experiments using recently developed eye-tracking facilities at Twycross Zoo, I will test how bonobos and chimpanzees process multimodal signals compared to unimodal signals.

Danielle Wood

Master's student

MPI EVA

Abstract: Are apes curious? Do they seek explanations? As part of my master's thesis, I will present a study conducted to explore whether great apes seek an explanation when presented with an event inconsistent with their prior knowledge. Specifically, we explore whether apes exhibit curiosity by seeking a “why” in the case of an impossible event, excluding food expectancy as a motivational driver. To test this, the experimenter placed two boxes, each with a stick situated in the middle, on a table in the middle of the testing booth. The participant entered and was encouraged to sit in the middle of the booth behind mesh (such that they were sitting opposite the experimenter). The experimenter then placed a reward in front of both sticks and highlighted, on one side, a normal stick that had no gap (the expected condition) and, on the other side, the presence of a gap (such that there were two stick pieces and a gap in the middle). The latter was meant to resemble a "non-functioning" stick, but the two pieces were connected underneath the box, enabling them to move forward simultaneously (the unexpected condition). After this, the two conditions were presented sequentially: the stick was pushed forward, causing the reward to descend through a hole in the front of the box, towards the participant. After both rewards were acquired, the experimenter pushed the boxes to opposite sides of the booth and left the room for two minutes. During this time, the apes were able to choose which box (if any) they explored, either by visual inspection or object manipulation. After the two minutes were over, the experimenter returned to the room and the trial ended. The variables measured and analyzed were the proportion of time spent inspecting the boxes (unexpected time / (unexpected time + expected time)), the proportion of time spent manipulating the boxes (computed the same way), and which side they approached first (unexpected or expected). Results are not yet available but will be presented at the poster session.
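
The proportion measure described in this abstract can be illustrated with a minimal Python sketch. This is not the study's analysis code: the function name `proportion_unexpected` and the example durations are hypothetical, and returning `None` when neither box was explored is an assumption about how such trials might be handled.

```python
from typing import Optional


def proportion_unexpected(unexpected_s: float, expected_s: float) -> Optional[float]:
    """Share of exploration time directed at the unexpected box.

    Both arguments are durations in seconds for a single trial.
    Returns None when the ape explored neither box, since the
    proportion is undefined in that case (an assumed convention).
    """
    total = unexpected_s + expected_s
    if total == 0:
        return None
    return unexpected_s / total


# Hypothetical trial: 30 s at the unexpected box, 10 s at the expected box.
example = proportion_unexpected(30.0, 10.0)
print(example)
```

A value above 0.5 would indicate more time spent on the unexpected box; the same function applies to manipulation time.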

Larissa Kahler

PhD student

Uniklinik RWTH Aachen

Abstract: Using sign language on a regular basis shapes and changes visual perception. These effects range from a larger peripheral field of view in signers compared to non-signers (Stoll et al., 2018) to differences in face perception, which suggest that hearing and deaf signers can discriminate face identities better than hearing non-signers (Bettger et al., 1997). However, little is known about the way the use of sign language may affect the perception of complex scenes. In the present study we want to assess the effects of sign language on eye gaze behavior towards complex scenes including faces and hands. Given the importance of these stimuli for communication in sign language, we expect signers to focus more on faces and hands while viewing complex scenes than non-signers.

To this end we are currently acquiring eye-tracking data in a group of adult signers (hearing or deaf) and a group of adult hearing non-signers. During the eye-tracking task, participants are asked to freely watch 100 complex scenes (Xu et al., 2014), presented for 3 seconds each, while their eye movement is tracked. We are planning to include 20 participants per group and examine both first fixations and dwell time for faces and hands and compare these measures between the two groups. In addition to the eye-tracking experiment, participants also complete several face discrimination tasks, allowing us to test if face recognition performance is linked to eye gaze behavior towards faces.

Data acquisition for this project is ongoing. With this experiment we hope to gain a deeper insight into how sign language changes visual perception and the plasticity of the visual system.

Bettger, J. G., Emmorey, K., McCullough, S. H., & Bellugi, U. (1997). Enhanced facial discrimination: Effects of experience with American Sign Language. Journal of Deaf Studies and Deaf Education, 223-233.

Stoll, C., Palluel-Germain, R., Gueriot, F. X., Chiquet, C., Pascalis, O., & Aptel, F. (2018). Visual field plasticity in hearing users of sign language. Vision Research, 153, 105-110.

Xu, J., Jiang, M., Wang, S., Kankanhalli, M. S., & Zhao, Q. (2014). Predicting human gaze beyond pixels. Journal of Vision, 14(1), 28.

Christina Steele

PhD student

Harvard University

Abstract: One of my goals is to develop practical expertise in the technologies used to gain insight into the minds of primates. I am a PhD student in developmental psychology working with Professor Ashley Thomas at Harvard University on whether human infants represent their social worlds. We are currently investigating whether infants represent the relationships that are most important to them, i.e., caregiving relationships. My long-term goal is to understand the developmental and evolutionary origins of our knowledge of social relationships. A next step is to investigate the phylogenetic roots of these abilities. To achieve this goal, I plan to investigate whether humans share these abilities with our nearest relatives: chimpanzees and bonobos. I currently do not have experience with eye-tracking, either with non-human primates or with human infants. Thus far, I have coded infant looking behavior manually. This workshop will therefore be instrumental in expanding the methods I will be able to use in my research (e.g., noninvasive, restraint-free eye-tracking; analyzing data in Python and ML approaches). In doing so, I will be able to address my research questions using a variety of measures (e.g., hand-reaching behaviors via motion-tracking). The workshop also offers an opportunity to learn directly from and establish relationships with people with expertise in comparative research whom I otherwise would not be able to meet regularly, including the conference organizers and the other attendees. It will be an opportunity to build long-term connections and establish collaborations. Additionally, presenting my research at the poster session will provide the chance to get feedback at an early stage of the research from people with different training and theoretical perspectives than my current mentors.

As a Black woman and the first and only one in my family to pursue a PhD, this workshop will be an essential step toward my long-term goal of becoming a professor. Pursuing a PhD, and doing so successfully, will be a significant turning point in my family’s lack of access to higher education. Finally, given the dearth of Black, Indigenous, People of Color (BIPOC) scholars represented in the cognitive sciences, becoming a professor is an important step in addressing past injustices toward BIPOC scholars in academia and increasing representation of Black voices.

Nicole Furgala

PhD student

Emory University

Abstract: Emergence and variation of behavioral milestones in chimpanzees (Pan troglodytes schweinfurthii) at Gombe National Park, Tanzania

Nicole Furgala¹, Margaret Stanton², Carson Murray³, Elizabeth Lonsdorf¹

¹ Department of Anthropology, Emory University

² School of Social and Behavioral Sciences, University of New England

³ Department of Anthropology, The George Washington University

Chimpanzees exhibit extended infant and juvenile periods in which species- and sex-typical skills are developed. Previous studies of behavioral development in wild chimpanzees have shown sex differences in time allocated to different behaviors, but less is known about other potential sources of variation, such as being a first- or later-born offspring. Using long-term data (1976-2018) from Gombe National Park, Tanzania, we identified the age of first observed occurrence across fourteen developmental milestones including social, spatial independence, technical, and vocal behaviors. The distribution of milestone acquisition indicated that simple behaviors were reached earlier, while more complex milestones were achieved later and showed more variability in first emergence. For example, the age of first occurrence of social milestones increased with social complexity (self-play mean = 0.41 yrs; social play mean = 1.16 yrs; nonfamily social play mean = 1.46 yrs). Later-born chimpanzees played for the first time earlier than firstborns (p = 0.04, CI: -0.62, -0.02), suggesting that having a sibling facilitated the emergence of social skills. However, no sex differences were found for age of first emergence in the targeted behaviors, suggesting that sex biases in behavioral development may be a result of time allocation once the milestone has been achieved. Investigating sources of variation is critical for understanding the socio-ecological factors shaping variability in developmental trajectories, behavioral outcomes, and skill acquisition among chimpanzees.

Matthew Babb

PhD student

Georgia State University

Abstract: Non-human primates naturally engage in prosocial behavior, but such behavior is inconsistently seen in experimental contexts. One important factor may be social relationships; however, this is challenging to study experimentally because most studies involve pre-determined dyads, which restricts the relationships that can be studied and is not representative of natural interactions. To test the influence of relationships, we gave four social groups of capuchin monkeys (Sapajus [Cebus] apella) an apparatus that one could activate to provide juice to another in their group, allowing for subject-driven partner choice. Capuchins provided juice more often when a group member benefitted than in controls in which juice was unavailable (the dispenser was outside the enclosure; b = -0.52; SE = 0.18; p = 0.004), suggesting that they understood the contingencies of the task. Not surprisingly, dominant monkeys interacted with the apparatus more than subordinates (b = 1.23; SE = 0.26; p < 0.001). Relationship quality, which was measured using a composite sociality index based on separately collected observations of grooming and contact, also influenced choices, with capuchins making prosocial choices more often for closer associates (b = 0.68; SE = 0.09; p < 0.001). Finally, to see whether oxytocin influenced decision-making, we induced endogenous oxytocin release through fur-rubbing. Capuchins were more prosocial after endogenous oxytocin release than after control sessions (b = 0.21; SE = 0.10; p = 0.038), but this did not differ across relationships. Thus, relationships do influence capuchins’ prosocial decisions, but increased oxytocin, while increasing prosocial behavior overall, does not interact with subjects’ relationship quality.

Ying Zeng

PhD student

Indiana University (Incoming)

Abstract: During referential intentional communication, the continuous alternation of gaze between a communicative partner and a specific object or point of interest attracts the partner’s attention towards the target. This behaviour is considered by many as essential for understanding intentions, and is thought to involve mental planning. We investigated the behavioural responses of seven bottlenose dolphins (Tursiops truncatus) that were given an impossible task in the presence of two experimenters, in which they were commanded to retrieve an object that was impossible to retrieve.

The dolphins were asked to retrieve an object from the other side of the pool by the ‘commanding experimenter’. Another experimenter, the ‘non-commanding experimenter’, was also present but did not give any commands, and so can be thought of as not relevant to the retrieval task. In the control condition, after the commanding experimenter had given the retrieval command, both the commanding experimenter and the non-commanding experimenter continued facing the dolphin subject whilst it attempted to complete the task. In the experimental condition, once the command had been given, the commanding experimenter turned around for the rest of the trial, whilst the non-commanding experimenter remained facing the dolphin.

We found that the dolphins spontaneously displayed gaze alternation only when the human commanding experimenter was facing them. They ceased to alternate their gaze between the impossible object and the commanding experimenter when the commanding experimenter had their back turned. Notably, the dolphins' behaviour differed from general pointing and gaze, as their triadic gaze sequence required a change of body orientation to inspect the impossible object and back to the commanding experimenter that occurred within a narrow time window (< 9.756 s). These findings suggest that the dolphins were sensitive to human attentional cues and utilized their own gaze cue (pointing) as a salient signal to attract the attention of the commanding experimenter towards a specific location. It is possible that the bottlenose dolphins’ referential communicative acts arise from their understanding of the functional significance of head/body orientation, as a consequence of their sensitivity to the head/body orientation of conspecifics when echolocating, as observed in the wild.

Abdulganiyu Jimoh

Graduate Visiting Research Student (MSc)

Mohammed VI Polytechnic University, Morocco

Abstract: The project uses a dataset of apes in the zoo that was separately annotated for segmentation and classification purposes. Leveraging machine learning, computer vision, and image processing techniques, the model segments the metallic mesh from thermal images in which the mesh is superposed, and segments the thermal image content from the mesh in images containing both apes and metallic mesh.

Inna Livytska

PostDoc

RWTH Aachen